Meta has confirmed that it will train its AI systems on public posts from Facebook and Instagram users in the U.K., renewing its push to advance AI development. The move comes after Meta paused its earlier plans over regulatory concerns raised by the Information Commissioner’s Office (ICO). The company states that it has incorporated feedback from regulators and has made the process more transparent by introducing a revised “opt-out” system. According to Meta, the strategy is intended to ensure that its generative AI models better reflect British culture, history, and language.

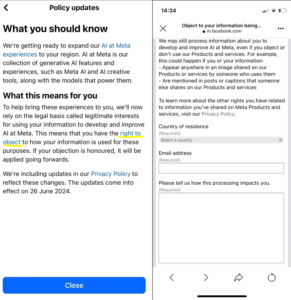

Starting next week, U.K. users will begin receiving in-app notifications explaining how their public content, such as posts and comments, will be used to train Meta’s AI. Users will be able to object if they do not want their data used, though Meta has emphasized that it will begin using data from users who do not actively opt out. The training is meant to improve Meta’s systems, but the process has raised privacy concerns, particularly around the clarity of the opt-out option provided to users.

This announcement follows Meta’s decision three months ago to halt its plans in response to growing regulatory scrutiny. The ICO, along with the Irish Data Protection Commission (Meta’s primary privacy regulator in the European Union), expressed concerns over Meta’s use of U.K. user data for AI training. Privacy advocates have questioned whether Meta’s new opt-out system fully addresses these issues.

The U.K.’s departure from the EU gives Meta a slightly more lenient regulatory environment than the European Union, where the company faces stricter regulation under the General Data Protection Regulation (GDPR). However, Meta’s use of data to train AI is still subject to the U.K.’s Data Protection Act, which closely mirrors the GDPR.

In the past, Meta has attempted to rely on a legal basis called “legitimate interest” (LI) to justify its data processing, arguing that this complies with privacy laws. However, experts and courts have questioned whether LI is a suitable legal foundation for such widespread use of personal data, especially for AI training. Last year, the Court of Justice of the European Union ruled against Meta’s reliance on LI for targeted advertising, casting doubt on whether the company can successfully use the same legal argument for training AI systems.

Opt-out objections

While Meta has adjusted its processes to address concerns, including simplifying its objection form for users who wish to opt out, critics argue that the changes are not sufficient. Privacy rights organizations, such as noyb, have filed complaints across several EU countries, arguing that Meta’s opt-out approach forces users to take unnecessary actions to protect their privacy, rather than requiring Meta to obtain explicit consent upfront.

Meta’s decision to restart AI training in the U.K., rather than the EU, underscores the challenges it faces with European privacy regulations. In other regions, such as the U.S., the company has been expanding its AI capabilities by using public content, but the comprehensive privacy laws in Europe have limited these efforts. Despite the regulatory hurdles, Meta continues to emphasize the importance of using public posts to enhance its AI, claiming that the training is necessary to ensure the technology can understand diverse cultural nuances and languages.

Meta’s focus on restarting AI training in the U.K. signals its determination to proceed with AI development, despite the regulatory challenges it continues to face in Europe. The company maintains that it will work within the bounds of the law to ensure compliance, but the debate over privacy rights and data usage for AI training is far from settled.